What’s Behind the Hype of AI Routers?


As AI systems grow more complex, one thing becomes increasingly clear: Not every query deserves the same computational treatment.

Why, then, do so many pipelines treat them that way?

Welcome to the rise of AI Routers, an emerging category of intelligent infrastructure designed to solve one of the most pressing challenges in today’s multi-model AI landscape: matching the right model to the right task.

What Is an AI Router?

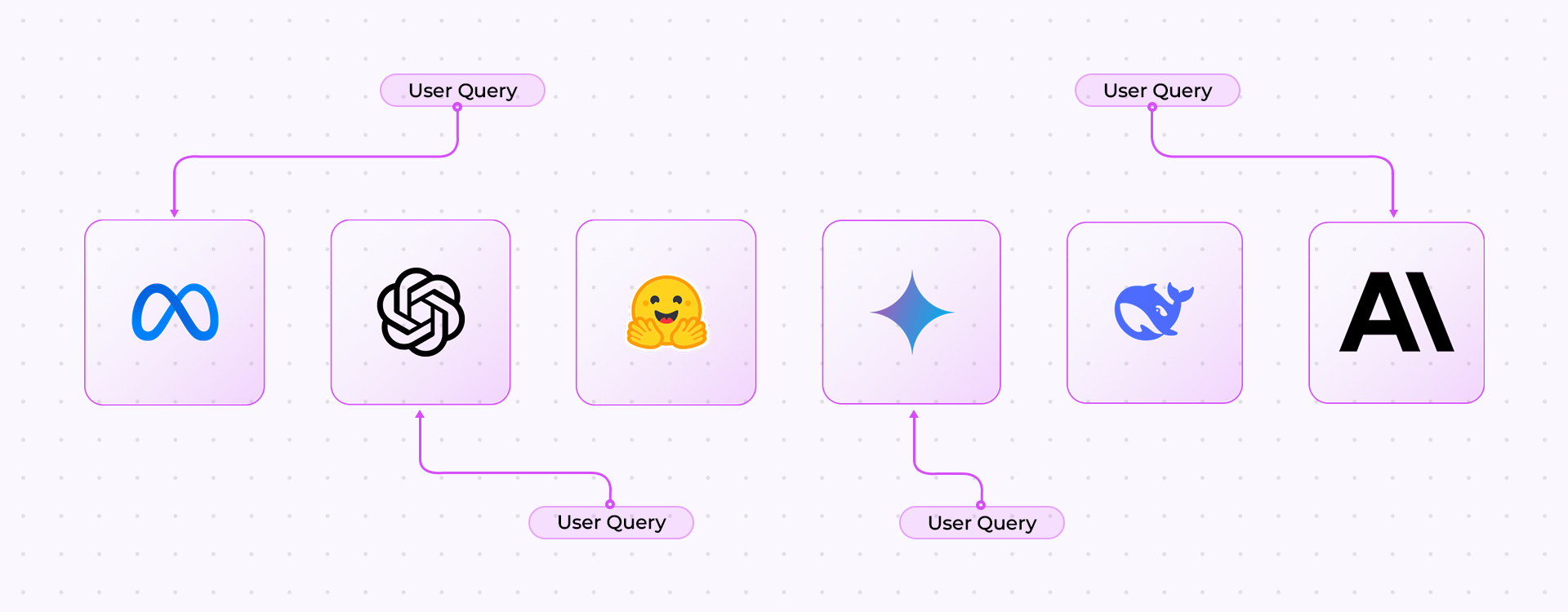

An AI Router is an orchestration layer that analyzes incoming user queries and determines which large language model (LLM), agent, or tool should handle the response. Instead of defaulting to a single monolithic model like GPT-4 or Claude for every task, AI Routers allow dynamic selection based on several factors:

- Query context: What is being asked?
- Performance needs: Who can respond fastest?
- Cost constraints: Which model delivers good enough answers for less?
- Accuracy benchmarks: Who has the best track record on similar tasks?
- Privacy or compliance requirements: Which models are safe to use?
- Energy efficiency: Which is less resource-intensive?
- Task history and preferences: Which model performed better in the past?

In other words, AI Routers are the control towers of the modern AI stack, routing traffic efficiently to optimize speed, accuracy, and cost.
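To make the selection factors above concrete, here is a minimal sketch of a score-based router. It is only an illustration under stated assumptions: the `ModelProfile` fields, the model names, the numbers, and the weights are all hypothetical, and a production router would likely draw these values from live benchmarks and billing data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    """Hypothetical per-model metadata; every value below is made up."""
    name: str
    cost_per_1k_tokens: float  # USD, illustrative only
    avg_latency_ms: float      # illustrative only
    accuracy: float            # 0..1 score on an assumed internal benchmark
    compliant: bool            # cleared for privacy-sensitive data?


def route(models, *, needs_compliance=False,
          w_accuracy=1.0, w_cost=0.5, w_latency=0.5):
    """Return the eligible model with the best weighted score."""
    # Hard constraint first: drop models that fail the compliance requirement.
    eligible = [m for m in models if m.compliant or not needs_compliance]
    if not eligible:
        raise ValueError("no model satisfies the constraints")

    # Normalize cost and latency to 0..1 so the weights are comparable.
    max_cost = max(m.cost_per_1k_tokens for m in eligible) or 1.0
    max_latency = max(m.avg_latency_ms for m in eligible) or 1.0

    def score(m):
        return (w_accuracy * m.accuracy
                - w_cost * m.cost_per_1k_tokens / max_cost
                - w_latency * m.avg_latency_ms / max_latency)

    return max(eligible, key=score)


# Placeholder catalog; names and figures are assumptions, not measurements.
MODELS = [
    ModelProfile("large-hosted", 0.03, 1200, 0.92, compliant=False),
    ModelProfile("mid-hosted", 0.002, 400, 0.80, compliant=False),
    ModelProfile("small-local", 0.0002, 150, 0.65, compliant=True),
]
```

With these placeholder numbers, `route(MODELS)` balances all three soft factors, while `route(MODELS, needs_compliance=True)` can only return a model flagged as compliant. Treating compliance as a hard filter and the rest as weighted trade-offs is one possible design choice, not the only one.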

Why Are AI Routers Becoming Essential?

With the explosion of open-source models (like LLaMA 3, Mixtral, DeepSeek) and the increased availability of specialized fine-tuned agents, relying on a single LLM has become both inefficient and expensive.

Modern applications often involve:

- Query classification
- Document search
- Tool use
- Context retrieval
- Long-chain reasoning

Relying on one model for all of this leads to over-computation, latency spikes, and budget bloat.

AI Routers solve this by introducing multi-model workflows, where each step goes to the model best suited to it. Building such a router means addressing several challenges:

- Prompt parsing & understanding: The router must analyze intent and task type before routing.
- Model benchmarking: It must “know” the strengths and weaknesses of every integrated model.
- Latency overhead: The routing logic itself must be near-instantaneous.
- Model warmups: Cold-start latency can nullify any benefit of routing.
- Lack of training data: There’s no standard dataset for training AI routers. Most are built manually.
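As a rough illustration of the prompt-parsing step, the sketch below classifies a query with keyword patterns and maps the task type to a model. The task labels, patterns, and model names are all hypothetical; this is the kind of manually built logic described above, and a real router would more likely use a trained classifier or embeddings.

```python
import re

# Hypothetical task labels and trigger patterns, checked in this order.
TASK_PATTERNS = {
    "code": re.compile(r"\b(function|bug|compile|python|stack trace)\b", re.I),
    "search": re.compile(r"\b(find|look up|search|where is)\b", re.I),
    "reasoning": re.compile(r"\b(prove|step by step|why|explain)\b", re.I),
}

# Hypothetical mapping from task type to a model or pipeline name.
TASK_TO_MODEL = {
    "code": "code-tuned-model",
    "search": "retrieval-pipeline",
    "reasoning": "large-general-model",
    "general": "small-general-model",
}


def classify(query: str) -> str:
    """Return the first task label whose pattern matches, else 'general'."""
    for task, pattern in TASK_PATTERNS.items():
        if pattern.search(query):
            return task
    return "general"


def pick_model(query: str) -> str:
    """Map a query to a model name via its task label."""
    return TASK_TO_MODEL[classify(query)]
```

Precompiled patterns keep the routing step cheap, which matters given the latency constraint, but keyword rules are brittle: a query that touches several task types is assigned to whichever pattern is checked first.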

Additionally, routers must be flexible across architectures (e.g., REST APIs, local inference, edge devices), and resilient to failure or fallback modes.
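Resilience to failure can be sketched as an ordered fallback chain. In the example below, the backend functions are stand-ins invented for illustration; in practice each would wrap a REST call, a local inference runtime, or an on-device model.

```python
from typing import Callable, Sequence


class AllBackendsFailed(RuntimeError):
    """Raised when every backend in the chain has failed."""


def call_with_fallback(prompt: str,
                       backends: Sequence[tuple[str, Callable[[str], str]]]):
    """Try each (name, callable) in order; return (name, response) on first success."""
    errors = []
    for name, backend in backends:
        try:
            return name, backend(prompt)
        except Exception as exc:  # broad on purpose: any failure triggers fallback
            errors.append(f"{name}: {exc}")
    raise AllBackendsFailed("; ".join(errors))


# Stand-in backends, purely hypothetical.
def flaky_remote(prompt: str) -> str:
    raise TimeoutError("remote endpoint timed out")


def local_model(prompt: str) -> str:
    return f"[local] {prompt}"
```

Calling `call_with_fallback("hi", [("remote", flaky_remote), ("local", local_model)])` skips the failing remote backend and returns the local response. Collecting the error messages rather than discarding them makes it easier to see why a chain degraded.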

Who’s Building AI Routers Today?

Several tools and platforms have entered the space, but few offer complete routing infrastructure:

| Tool | What It Does | Limitations |
| --- | --- | --- |
|  | Agent routers & LLM selectors | Fragile logic at scale |
|  | Retriever fusion & node routing | Designed for RAG only |
|  | Basic tool selection | Manual and limited |
| Microsoft AutoGen | Agent orchestration with routing | Still experimental |
|  | Multi-model access | Routing is not automated |
|  | Multi-model access | Routing is not automated |

Most solutions let you implement routing, but don’t offer the intelligence themselves. This gap makes AI Router infrastructure one of the next big frontiers in AI tooling.

The Rise of Multi-Model Workflows

As models diversify across modality, specialization, cost structure, and performance, multi-model workflows become not just useful but essential.

Today’s enterprise AI systems must:

- Choose between hundreds of models
- Dynamically configure them by task
- Track performance and learn over time

Routing isn’t a side feature; it’s becoming the central nervous system for how intelligent systems will operate.
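One simple way to "track performance and learn over time" is to record outcomes per task and model, then prefer whichever model has done best so far while still exploring occasionally. The sketch below uses an epsilon-greedy rule as an assumed technique; the class name, the task and model labels, and the definition of "success" are all hypothetical.

```python
import random
from collections import defaultdict


class PerformanceTracker:
    """Tracks per-(task, model) success rates and picks models epsilon-greedily."""

    def __init__(self, models, epsilon=0.1, seed=None):
        self.models = list(models)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.successes = defaultdict(int)
        self.attempts = defaultdict(int)

    def success_rate(self, task, model):
        n = self.attempts[(task, model)]
        # Unseen pairs get an optimistic 1.0 so each model is tried at least once.
        return self.successes[(task, model)] / n if n else 1.0

    def choose(self, task):
        # Explore occasionally; otherwise exploit the best observed success rate.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.models)
        return max(self.models, key=lambda m: self.success_rate(task, m))

    def record(self, task, model, success):
        self.attempts[(task, model)] += 1
        self.successes[(task, model)] += int(success)
```

A caller would invoke `choose(task)` before each request and `record(task, model, success)` afterwards, where `success` might come from user feedback or an automated check. The exploration rate `epsilon` trades off trying alternatives against sticking with the current best.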

Final Thought: AI Routing is Not Hype, It’s Infrastructure

The future of AI won’t be built on single-model systems. It will be built on adaptive, cost-aware, intelligent routing.

Just as operating systems intelligently manage hardware resources, AI Routers will manage model resources, balancing compute, performance, and cost.

We’re still in the early days, but the pattern is clear:

AI Routing is the infrastructure backbone for the next generation of intelligent, scalable, multi-model systems.